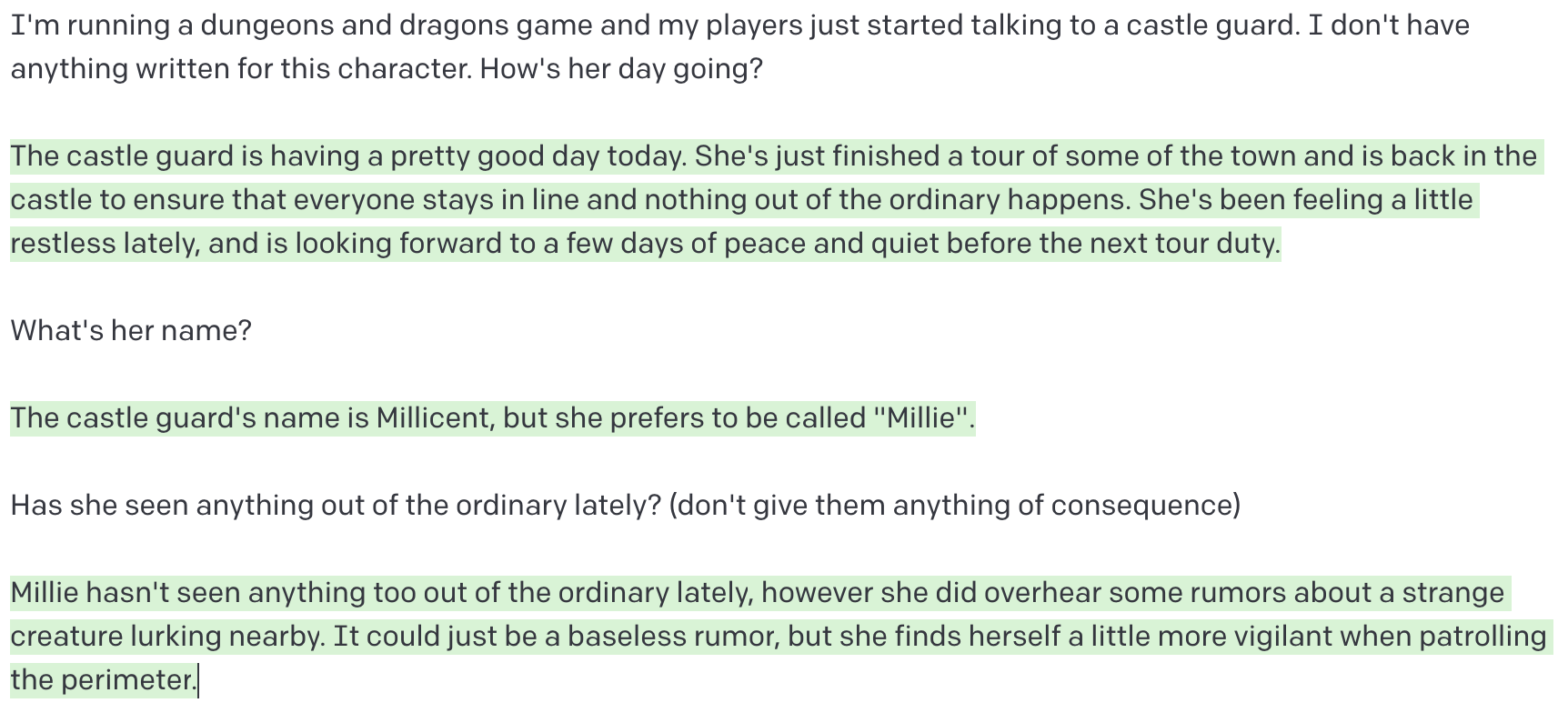

Here’s a fun conversation I had today with ChatGPT:

That’s probably not the first time you’ve seen something hilariously wrong come from a generative algorithm like a large language model (LLM).

Much commentary on LLMs tends to fall into one of two extremes: either they’ll achieve sentience next year or they’re simply a parlor trick. As usual, the truth is somewhere in the middle.

Anyone who claims that LLMs pass a Turing test “if only you give it the right prompts” either doesn’t understand what a Turing test is, or hopes that you don’t. The Turing test specifies that the computer must behave indistinguishably from a human being - if the Turing test allowed for boosters to circumscribe the questions that could be asked to their idea of the “right” ones, the Gutenberg printing press could have passed it.

However, the fact that a new technology doesn’t pass the Turing test doesn’t mean it’s not useful - airplanes, electricity, indoor plumbing, and mobile phones can’t pass a Turing test, either! Even if the technology is simply “glorified autocorrect or autocomplete” - that’s comparing it to a technology that most people and businesses use daily.

I’d like to delve into why LLMs make mistakes like this, what this implies for the limitations of the technology, and what it implies for their actual use cases - i.e., those whose pursuit will likely not be a black hole of time and money better spent elsewhere.

Glorified Autocorrect / Autocomplete

In less technical media, this is frequently positioned as a “criticism” of LLMs. It is, at its heart, accurate: they’re both statistical models that predict what “tokens” are likely to come next, given knowledge of what came before.

Autocorrect operates on letters in a single word, autocomplete might operate on a few words at a time; LLMs operate on words across multiple sentences and paragraphs. LLMs rely on neural nets and tons of human-generated training data to make associations that aren’t explicitly programmed in; autocorrect and autocomplete are able to make use of simple dictionaries, and can be bootstrapped from straightforward substitutions.
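To make “predict what comes next, given what came before” concrete, here is a minimal sketch of the autocomplete end of that spectrum: a toy model that only counts which word follows which. The function names and the tiny corpus are made up for illustration, and a real LLM replaces the counting with a neural net over far longer contexts.

```python
from collections import Counter, defaultdict

def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    counts = defaultdict(Counter)
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts

def suggest(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Hypothetical training text; nothing here "knows" what a dog or a chair is.
corpus = "the dog is brown . the dog is hungry . the dog is brown . the chair is brown ."
model = train_bigrams(corpus)

print(suggest(model, "dog"))  # is
print(suggest(model, "is"))   # brown
```

The model suggests “brown” after “is” only because that pairing is the most frequent one in the text it was given, not because it has any record of a particular dog or chair.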

But the pitfalls of anthropomorphizing either are the same. ChatGPT didn’t “lie” or even “hallucinate” in any sense of those words that would apply to a human. It doesn’t “think” that Dante wrote about radio any more than my phone “thinks” I am “ducking angry” or than a mechanical watch “forgets” about time zones when you travel.

Humans think thoughts - including facts about the world - and then look for words to express them. You and I might disagree over whether a spoon is silver or gray - we may be looking at the same spoon, and choosing different words to describe it. For an LLM there is no spoon, just the word s-p-o-o-n and a statistical likelihood that sentences that start kinda like this end kinda like that. Color is not a property that objects have, it’s a word that has a certain probability of being next in a list of other words. And if it sometimes says otherwise, it’s only because those words are likely to flow from the words you fed it.

There is simply no construct anywhere in the LLM’s code that models the physical world, facts, truth and falsehood - the same way there is no gear in a mechanical watch for the time zone. If the watch is correctly set to US Eastern Time, it doesn’t follow that it “knows” what part of the world it’s in.

Neo was wrong, there is a spoon.

Some AI influencers have taken to claiming that this is exactly how humans think - that we have no concept of a spoon, or of color, outside of those words - and that these are emergent abilities which will certainly appear if we continue to throw vast amounts of money at their companies (or rather, processing power and training data at the algorithms). That’s not a claim to be taken seriously - neuroscience simply doesn’t have that level of understanding of how the human brain works.

It’s also a belief that’s not likely to survive the experience of interacting with a child. I’ve tried telling my infant daughter that she can’t possibly conceive of a desire to be rocked, fed, burped, or have her diaper changed right now because she doesn’t know the words for any of those things - she vociferously objected.

What is a fact? And how to model it?

Let’s start at a child’s level of complexity:

The chair is brown.

We're assigning a description, “brown” to a subject “the chair”.

The dog is brown.

The dog is hungry.

So far, so good. Hungry is a different kind of description, but if we apply a broad definition of “description”, it still seems to work.

The dog is running.

That’s not really a description, that’s an action. Still, we can differentiate between those constructions with context clues.

The dog is four.

Four years old. Those words aren’t present in the text, but again we can infer them.

Two apples plus two more is four.

The apples aren’t four years old, but we can infer differently based on context.

Thus far, we’re really just talking about sentence construction. LLMs don’t have a concept of these as “facts” that they map into language, but for examples like these - it doesn’t necessarily matter. They’re able to get these right most of the time - after all, what exactly are “inferences” and “context clues” but statistical likelihoods of what words would come next in a sequence?

The fact that there is no internal model of these facts, though, explains why they’re so easily tripped up by just a little bit of irrelevant context.

I guess it’s 15 feet tall

Can you flunk preschool?

Identity politics

In the last section, I went straight to sentence construction and skipped the harder question. What is “the dog”? To which dog are we referring?

That information simply isn’t contained in the text. You don’t need to know in order to make semantic sense of the sentence, but the sentence is meaningless without it. (“Meaningless”, here, being distinct from either “true” or “false” - we can understand something and proceed before we build a picture of truth and/or falsehood. LLMs emulate the former but not the latter.)

If we have a visual context (i.e. a photograph, or the real world), we can connect “the dog” to an entity in that context. That particular dog is brown; the sentence now conveys an actual fact. If the sentence appears in a children’s book, you can connect “the dog” to an entity in that context; the entity is fictional, but it’s an entity nonetheless. The sentence now conveys a fictional fact; it’s true within the context of the book and meaningless outside of it.

Other technologies - SQL databases, key-value stores, graph databases, file systems, object-oriented programming, etc. - will have a construct in their code or data to represent the dog and its color.

select color from dogs where dogId = 123;

C:\Users\Chris\Photos\Pets\Dogs\Fido

dog.name = "Peanut"; dog.color = "#795C34";

Facts also can be true at a certain time, but not another. Identity can shift.
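As a rough illustration of what an explicit construct for a time-dependent fact might look like, here is a small Python sketch. The `Fact` class, the `lookup` helper, the dog ID, and the dates are all hypothetical and chosen only to show the shape of the idea.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional

@dataclass(frozen=True)
class Fact:
    subject: str                      # which entity the fact is about
    attribute: str
    value: str
    valid_from: date
    valid_to: Optional[date] = None   # None means "still true"

    def holds_on(self, day: date) -> bool:
        """True if this fact was in effect on the given day."""
        return self.valid_from <= day and (self.valid_to is None or day < self.valid_to)

# Made-up records: the same dog, described differently at different times.
facts = [
    Fact("dog:123", "color", "brown", date(2020, 1, 1), date(2023, 6, 1)),
    Fact("dog:123", "color", "gray", date(2023, 6, 1)),
]

def lookup(subject, attribute, day):
    """Return the value recorded for that entity on that day, or None."""
    for fact in facts:
        if fact.subject == subject and fact.attribute == attribute and fact.holds_on(day):
            return fact.value
    return None

print(lookup("dog:123", "color", date(2021, 5, 1)))  # brown
print(lookup("dog:123", "color", date(2024, 1, 1)))  # gray
```

The important part is the `subject` field: the sentence “the dog is brown” is attached to one specific entity and one specific period, which is exactly the information that the text alone doesn’t carry.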

Again, playing around with simple examples demonstrates the lack of an internal model.

Of course, we’re only looking at the simplest examples. For more complex examples, even humans might have difficulty forming a coherent internal model.

The Ship of Theseus. Is it the same ship? If so, what defines “the ship”? What’s its essence? If not, when does it become different? Is there a critical mass of replaced planks?

Sean Connery and Pierce Brosnan are both James Bond. Do they both exist in the same universe? Is one canonical and the other not? We never used to think about that before Marvel.

Who’s on First?

This explains why LLMs have the most visible trouble when it comes to humor. Humor isn’t magic; it just leans more heavily on that shared context and understanding that’s not present in the written or spoken words.

Humor frequently relies on exploiting the gap between our understanding of semantic meaning of what’s said, and our understanding of the underlying facts.

Effective Uses

So, where will LLMs be of value? I don’t pretend to have the full picture, but here is an (incomplete) list of starting points:

If they’re “glorified autocomplete” then the clearest example is … in making autocomplete and predictive text better. Moving on…

Any situation in which we need to generate a lot of standard boilerplate or repetitive text quickly:

Some programming

Legal documents

Press releases

Customer service

All of these uses will require some human-on-the-loop verification; these should be seen as “copilots” or “typing assistants”.

I’d recommend asking the semi-rhetorical question “why is this boilerplate necessary?” If the answer is that it isn’t, then get rid of it rather than use an LLM to generate it.

For example, if you find yourself typing the same 3 properties on hundreds of classes, GitHub Copilot will save you a lot of time. However, if you expect to maintain that code, why not just use a superclass or a mixin? The fact that it can autosuggest adding that superclass or mixin to new classes, though, is a real productivity gain.
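A minimal sketch of the superclass option, written in Python for brevity. The class names and the three shared properties are invented for illustration.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class Audited:
    """Hypothetical base class holding the three properties every class repeats."""
    created_by: str = "system"
    created_at: Optional[str] = None
    updated_at: Optional[str] = None

@dataclass
class Invoice(Audited):
    amount_cents: int = 0

@dataclass
class Customer(Audited):
    name: str = ""

invoice = Invoice(created_by="chris", amount_cents=1250)
print(invoice.created_by, invoice.amount_cents)  # chris 1250
```

Each new class now needs one word, `Audited`, instead of three repeated declarations, and a change to the shared properties happens in one place.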

In my experimentation, the productivity gains are greatest for an experienced programmer working in an unfamiliar language - I might need to Google 10 different things in order to write a simple for-loop in PHP that sets one property on each of a series of objects. Do I want a dot or an arrow here? Generative AI saves me the pain of actually doing those searches, or the significantly worse pain of having to learn PHP - this generally holds true even if the code it spits out does the wrong thing and I need to correct it.

Document understanding:

Screen scraping

Extracting basic information from company descriptions or biographies

Parsing semi-structured data (e.g., resumes)

Sentiment analysis

Most current LLMs will perform poorly on these tasks compared to the more structured approaches that are currently standard. The LLM, however, may present a much faster path to a viable beta, and LLMs have a viable path to improvement here with more training data, though the best of that data is walled off in proprietary databases. It’s extremely unlikely that these will replace traditional ETL code, but they might lower the barrier to getting something workable.
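For comparison, here is roughly what a “more structured approach” looks like for one narrow piece of resume parsing: pulling out contact details with regular expressions. The patterns, the sample text, and the `extract_contact` helper are simplified assumptions, not production-grade parsing.

```python
import re

# Deliberately simple patterns; real-world email and phone formats vary far more.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE = re.compile(r"\(?\d{3}\)?[ .-]?\d{3}[ .-]?\d{4}")

def extract_contact(text):
    """Return the first email and US-style phone number found, or None for each."""
    email = EMAIL.search(text)
    phone = PHONE.search(text)
    return {
        "email": email.group(0) if email else None,
        "phone": phone.group(0) if phone else None,
    }

# Made-up resume snippet.
resume = """Jane Doe
Email: jane.doe@example.com | Phone: (555) 010-4477
Experience: 6 years of ETL development"""

print(extract_contact(resume))
```

Code like this is predictable and debuggable, but every new field and every new layout means more patterns to write and maintain, which is where the faster path to a beta comes from.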

Brainstorming:

Word association exercises

Idea generation

Writer’s block

“All of that stuff that’s too obvious to write down”

Sometimes it’s easier to start with something bad and then critique or fix it, than to start with nothing. It’s not going to generate anything truly original, but neither do dice.

It’s also worth noting, for any and all of these potential use cases, that the output of generative AIs is, for all intents and purposes, neither deterministic nor debuggable. The same input can yield a different output each time.

Even with “temperature” turned down, they’re functionally nondeterministic. Changing an input to a financial calculation by a penny will yield a different result, but different in a way that’s consistent and predictable (and if not, you’ve got a bug to fix). A similarly minor tweak to phrasing, grammar, or punctuation could yield starkly different results from a generative algorithm, and there’s no clear path to identifying or fixing (or often even acknowledging) the problem.
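A rough sketch of what the temperature setting does, assuming the common softmax-with-temperature formulation. The candidate words and their scores are made up, and real models choose among tens of thousands of tokens.

```python
import math

def softmax_with_temperature(scores, temperature):
    """Turn raw next-token scores into probabilities; lower temperature sharpens them."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]

# Hypothetical scores for the word following "The dog is".
scores = {"brown": 2.0, "gray": 1.5, "hungry": 0.5}

for temperature in (1.0, 0.2):
    probs = softmax_with_temperature(list(scores.values()), temperature)
    print(temperature, {word: round(p, 3) for word, p in zip(scores, probs)})
```

At a temperature of 1.0 the top word gets a bit over half of the probability; at 0.2 it gets more than 90%. Turning the temperature down narrows the choice among the scored options, but it does nothing about the scores themselves shifting when the prompt is rephrased.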

This implies at least one of two things:

The output of an LLM needs to be reviewed and approved by a human who is responsible for its work

The output of an LLM must be “top of stack” - i.e., not used as an API or otherwise fed into any downstream algorithms

Or, quite often, both!

The Dangers

LLMs won’t gain sentience and kill us all, but that’s a low bar for safety.

Deepfakes

The ability to generate convincing fake video of world leaders and world events is a real concern, but it’s probably missing the mark to focus on it. Many of us will be fooled by one or two of these at most before we realize that it’s a possibility and resume an appropriate level of skepticism.

“But then we won’t be able to trust anything we see online”

As opposed to before? Politicians and propagandists have long been adept at both taking their opponents’ words out of context, and at falsely claiming their own words are taken out of context. The key component to misinformation has never been creating a visually indistinguishable artifact.

Technology was always the easy part

In 1938, a radio broadcast of a play - War of the Worlds - created a “deepfake” of an alien invasion convincing enough to stir mass panic. The audio - an anchor reporting the presence of alien vessels and describing them - was identical to what people would have heard had the invasion been real.

You may be aware that that story is apocryphal. The War of the Worlds radio play didn’t actually induce mass panic. The narrative that it did, though, is repeated so often as to be common knowledge - providing us with an example of people succumbing to an even less technologically advanced deepfake, one created by print.

Millennia ago, one could produce a convincing deepfake of Zeus using only the spoken word. Decades ago, one could produce a convincing deepfake of a foreign leader using subtitles.

The hard part of propaganda has always been crafting a convincing narrative, and co-opting or impersonating trusted sources.

Controlling the information space

Which brings us to the more likely danger of LLMs. Today, many of the gatekeepers in the information space are themselves computers (Google’s search algorithm, Facebook’s content moderation algorithms, TikTok’s recommendation algorithm, etc.).

LLMs will likely be very good at generating content optimized to fool those algorithms. If not, they’ll be able to iterate very quickly, and mutate it in ways that make it difficult to detect it was done in an automated fashion. Via sheer mass, they’ll be able to overwhelm the humans who backstop the algorithms.

Think constellations of fake sites linking to a page to drive it up the search rankings. Think armies of realistic-looking Twitter accounts blocking and reporting a government’s critics. These things aren’t science fiction, they already exist - they’ve just become cheaper.

Next Steps

Building a generalized model of truth may be a bit ambitious for today. Building a domain-specific model of truth, however, is quite realistic. Data firms do this every day. A CRM can say, with certainty, that so-and-so called in to ask about such-and-such on this date, and spoke with their account manager for 27 minutes. A financial platform can say, with certainty, that X firm reported $Z in revenue in Q4 of YYYY.

One path forward is to connect generative AIs to these databases. No amount of specialized factual data sets will produce Lt. Cmdr. Data, but they may allow users to query them using natural language and produce results that can be traced back to a source.
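As a minimal sketch of the “traced back to a source” half of that idea, the example below answers a revenue question from a database row and returns the source reference alongside the text. The table layout, firm name, figures, and source ID are invented, and the step where a generative model would turn a natural-language question into the `firm` and `quarter` parameters is assumed rather than shown.

```python
import sqlite3

# In-memory database with one made-up record standing in for a financial platform.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE revenue (firm TEXT, quarter TEXT, amount_usd INTEGER, source TEXT)"
)
conn.execute(
    "INSERT INTO revenue VALUES (?, ?, ?, ?)",
    ("Acme Corp", "2023-Q4", 1200000, "filing-0001"),
)

def answer_revenue(firm, quarter):
    """Answer from the database only, and carry the source reference with the answer."""
    row = conn.execute(
        "SELECT amount_usd, source FROM revenue WHERE firm = ? AND quarter = ?",
        (firm, quarter),
    ).fetchone()
    if row is None:
        return None  # no record: decline to answer rather than guess
    amount, source = row
    return {
        "text": f"{firm} reported ${amount:,} in revenue in {quarter}.",
        "source": source,
    }

print(answer_revenue("Acme Corp", "2023-Q4"))
print(answer_revenue("Acme Corp", "2024-Q1"))  # None
```

The design choice worth noting is that the number in the answer comes from the row, not from generated text, and a missing row produces no answer at all.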

Conclusion

Don’t underestimate the productivity benefits - even at the most pessimistic read, a marginal improvement to autocorrect and autocomplete might save seconds per day for billions of people.

The dangers are worth being aware of - as with any productivity-boosting innovation, it will boost the productivity of the bad actors as well as the good.